For some context, I love Taylor Swift. I grew up with her songs; she was the first English-language artist I listened to, back in 2007 on my first school trip out of state. Sure, I had periods where I was embarrassed that she was my favorite artist, and then my roommate during my PhD brought me back to being a “Swiftie”, as they say. But I never had disposable income when she toured (and unfortunately, last year visa issues meant I couldn't attend the last leg of her Eras Tour). So, I have never been to one of her concerts, ever. And then she announced her new album last month.

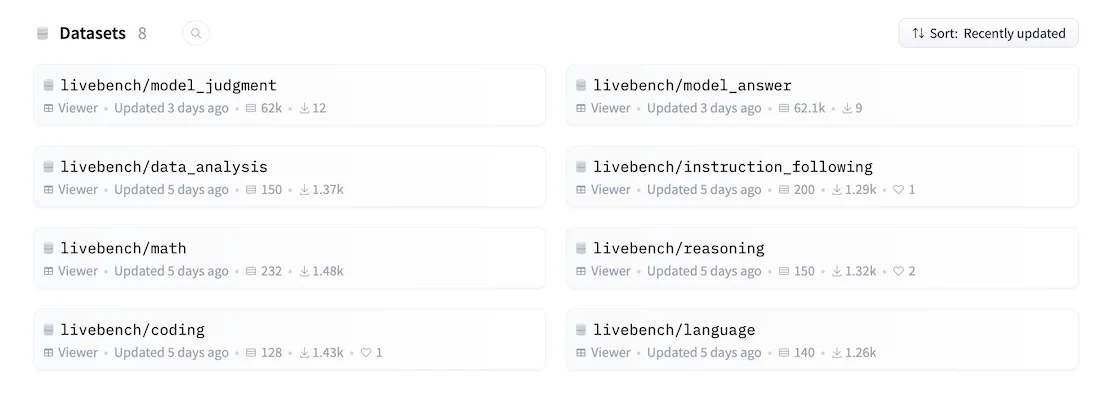

Today, I saw this post on Twitter showing the tracks and their lengths. And you might think: no big deal, what's the thing here? The thing is, the reason I wanted to see the Eras Tour so much was that it was a 3.5-hour performance. So, of course, I wanted to know how long this album is, in case she ever ends up touring it.

01:20 PM · Sep 26, 2025 UTC ‼️| ‘The Life of a Showgirl’ tracks length!

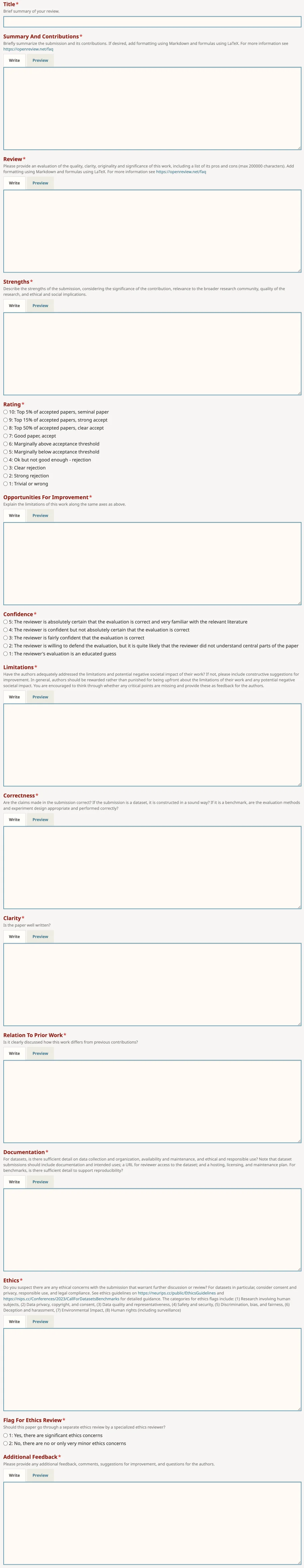

And so, I did what any person does on seeing the tweet above: I used Gemini on screen, copied the text (it missed the name of the first song, but got everything else), and pasted it into ChatGPT to ask for the total length of the album.

And here is how that went:

Add these numbers and give me album length 3:46

Elizabeth Taylor

3:28

Opalite

3:55

Father Figure

3:32

Eldest Daughter

4:06

Ruin The Friendship

3:40

Actually Romantic

2:43

Wish List

3:27

Wood

2:30

3:31

CANCELLED!

Honey

The Life of a Showgirl (w/ Sabrina Carpenter)

3:01

4:01

Got it — let’s carefully add these track times step by step to get the full album length.

Here are the times you gave me:

• Elizabeth Taylor → 3:46

• Opalite → 3:28

• Father Figure → 3:55

• Eldest Daughter → 3:32

• Ruin The Friendship → 4:06

• Actually Romantic → 3:40

• Wish List → 2:43

• Wood → 3:27

• CANCELLED! → 2:30

• Honey → 3:31

• The Life of a Showgirl (w/ Sabrina Carpenter) → 3:01

• (final track) → 4:01

⸻

Step 1: Separate minutes and seconds

Minutes = 3 + 3 + 3 + 3 + 4 + 3 + 2 + 3 + 2 + 3 + 3 + 4 = 38 minutes

Seconds = 46 + 28 + 55 + 32 + 6 + 40 + 43 + 27 + 30 + 31 + 1 + 1 = 340 seconds

⸻

Step 2: Convert seconds

340 seconds ÷ 60 = 5 minutes, remainder 40 seconds

So, add 5:40

⸻

Step 3: Add minutes

38 minutes + 5 minutes = 43 minutes

⸻

✅ Final Album Length = 43:40

⸻

Do you want me to also give you the average song length across these 12 tracks?

cool

Great! Just for fun, let’s quickly calculate the average track length.

Total album length = 43 minutes 40 seconds → convert to seconds:

43 × 60 + 40 = 2580 + 40 = 2620 seconds

Number of tracks = 12

Average = 2620 ÷ 12 ≈ 218.33 seconds

Convert back to minutes:

218 ÷ 60 = 3 minutes, remainder 38.33 seconds → roughly 3:38 per track.

So the average track length is about 3 minutes 38 seconds.

If you want, I can also make a nice table showing all tracks with cumulative time so it’s easier to visualize the album flow. Do you want me to do that?

I don't know if you've spotted the problem yet, because I hadn't until I saw this tweet.

01:06 PM · Sep 26, 2025 UTC 🚨 “The Life of a Showgirl” is officially 41 minutes long.

“The Fate of Ophelia” — 3:46

“Elizabeth Taylor” — 3:28

“Opalite” — 3:55

“Father Figure” — 3:32

“Eldest Daughter” — 4:06

“Ruin The Friendship” — 3:40

“Actually Romantic” — 2:43

“Wi$h Li$t” — 3:27

“Wood” — 2:30

“CANCELLED!” — 3:31

“Honey” — 3:01

“The Life of a Showgirl” — 4:01

Huh? How?

Because I thought it was 43 minutes 40 seconds long, and even if they had dropped the seconds (which, spoiler alert, they did), it should still say 43 minutes.

At this point, I've used GPT-5 (yes, the free version) for simple math often enough that I trust its results. So, my first thought wasn't even that the calculation was wrong. I initially assumed that Gemini's text extraction had messed something up (other than omitting the name of the first song). Apparently not: all the track times were extracted correctly, and the messed-up formatting didn't matter either, since GPT-5 put the times in the right order (even if the titles were incorrectly paired because of Gemini).

So, I added them up on my own, and yeah, it is 41 minutes 40 seconds.
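For anyone who would rather not add twelve timestamps by hand, here is a minimal Python sketch of the same check. The track times are copied from the tweet above; the helper names `to_seconds` and `album_length` are my own, made up for illustration.

```python
# Track times as listed in the tweet above, in album order.
TRACK_TIMES = [
    "3:46", "3:28", "3:55", "3:32", "4:06", "3:40",
    "2:43", "3:27", "2:30", "3:31", "3:01", "4:01",
]


def to_seconds(mmss: str) -> int:
    """Convert an 'M:SS' string to a number of seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def album_length(times: list[str]) -> str:
    """Sum 'M:SS' durations and format the total as 'M:SS'."""
    total = sum(to_seconds(t) for t in times)
    minutes, seconds = divmod(total, 60)
    return f"{minutes}:{seconds:02d}"


print(album_length(TRACK_TIMES))  # 41:40
```

Converting everything to seconds first avoids the separate minutes-and-seconds bookkeeping entirely, so there is only one sum to get wrong.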

So, what gives?

Well, separating the minutes and seconds was a great idea, but this part is wrong: Minutes = 3 + 3 + 3 + 3 + 4 + 3 + 2 + 3 + 2 + 3 + 3 + 4 = 38 minutes

That sum is actually 36 minutes, which is where the extra 2 minutes came from (36 plus the 5 minutes carried over from the seconds is 41, not 43). And I wouldn't have even cared to check or verify had I not come across this tweet.
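To pin down which step went wrong, the three steps from the ChatGPT reply can be replayed one at a time and compared against what it claimed. This is only a rough sketch: the `CLAIMED` values are copied from the reply quoted earlier, and `recompute_steps` is a hypothetical helper name I picked for this post.

```python
# Track times from the tweet; CLAIMED holds the intermediate values
# copied from the ChatGPT reply quoted earlier in this post.
TRACK_TIMES = [
    "3:46", "3:28", "3:55", "3:32", "4:06", "3:40",
    "2:43", "3:27", "2:30", "3:31", "3:01", "4:01",
]
CLAIMED = {"minutes": 38, "seconds": 340, "total": "43:40"}


def recompute_steps(times: list[str]) -> dict:
    """Redo the reply's three steps: sum minutes, sum seconds, carry over."""
    pairs = [t.split(":") for t in times]
    minute_sum = sum(int(m) for m, _ in pairs)
    second_sum = sum(int(s) for _, s in pairs)
    carried, leftover = divmod(second_sum, 60)
    return {
        "minutes": minute_sum,
        "seconds": second_sum,
        "total": f"{minute_sum + carried}:{leftover:02d}",
    }


actual = recompute_steps(TRACK_TIMES)
for step, claimed in CLAIMED.items():
    verdict = "ok" if actual[step] == claimed else "MISMATCH"
    print(f"{step}: claimed {claimed}, recomputed {actual[step]} -> {verdict}")
```

The seconds sum (340) matches, so the carry step was fine. Only the minutes sum is off, and the wrong total follows directly from it.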

So chat, what did we learn? That you still need to verify your pocket LLM calculator's results, sometimes even for basic math. Color me surprised.

I've been using LLMs as a calculator pretty confidently this year. For example: pasting in a menu and asking what it would cost with Seattle tax to order these 3 items, or pasting a price list with complicated rules for times/weekdays/number of people and asking for the total cost in my situation, or pasting multiple Jupyter cell outputs for benchmarks across 5 models and asking the model to create a table with columns for the absolute and percentage difference between the worst and best model.

This situation just reminded me that I still need to verify the basic math output — I honestly didn't expect that to be the case.

Cite This Page

@article{jaiswal2025youstillneedtov,

title = {You still need to verify your LLM calculator results},

author = {Jaiswal, Mimansa},

journal = {mimansajaiswal.github.io},

year = {2025},

month = {Sep},

url = {https://mimansajaiswal.github.io/posts/you-still-need-to-verify-your-llm-calculator-results/}

}
