Another summer, another round of paper reviewing issues. I kinda just wanted to record this Twitter conversation somewhere, so that I have a place to point people to when they ask me what I would change about reviewing.

My hot take is reviews should be a single text box. Asking people to fill in 10 text boxes for a paper increases the chances that no one wants to do it.

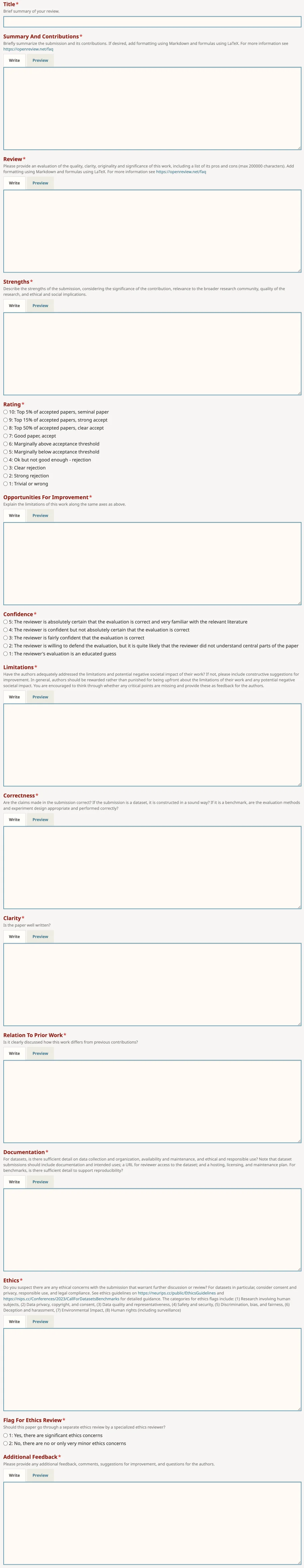

Especially looking at NeurIPS Datasets & Benchmarks: why does it have NINE text areas other than "Summary And Contributions" and "Review"?

ACs have said that it allows inexperienced reviewers to decompose the review.

I guess it is the way I review that makes this format really hard for me. I feel guilty about writing a single line in the textboxes there, but I review line by line on an iPad (similar to Overleaf comments, just in PDF format) and would very much prefer to be able to extract those comments and just paste them in. For example, I often write “typo: fix this word” or “cite X here, which negates the results you are obtaining, talk about why that might be the case” inline. Extracting these into separate content areas (especially ones with overlapping purposes) feels really overwhelming.

Yanai here makes a point about highlighting the global, i.e. main, strengths and weaknesses of the paper: eventually these are the things the AC should focus on, and reviewers should make it easier for the AC to detect them.

And I do not disagree, but with a review load of more than 4 papers in a month, I end up dreading the second pass requirement instead of being able to actually provide the review. I have always wondered if there's an opportunity to run reviewing experiments at newer conferences like @COLM_conf, where people review the paper inline like @hypothes_is/@askalphaxiv and tag each comment with its kind (suggestion, missing, etc.). Would that improve the reviewing culture? It would still be easy for ACs to filter out the main global points based on tags, but it wouldn’t require a second pass. As a cherry on top, this kind of inline review is also hard to produce with GPT, unlike the GPT-based summary reviews of a paper.
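To make the tagging idea a bit more concrete, here is a rough sketch of what tagged inline comments and the AC-side filter could look like. Everything in it is an assumption made for illustration: the `InlineComment` fields, the tag names, the `is_global` flag, and the sample comments are hypothetical, and none of it reflects how @hypothes_is, @askalphaxiv, or any conference system actually stores annotations.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class InlineComment:
    """One reviewer annotation on the PDF (hypothetical schema)."""
    page: int                # page the highlight sits on
    quote: str               # the highlighted text
    tag: str                 # hypothetical kind: "typo", "missing", "suggestion", ...
    note: str                # what the reviewer wrote next to the highlight
    is_global: bool = False  # reviewer flags this as a main point for the AC


def group_by_tag(comments):
    """Group comments by tag, keeping the order in which they were written."""
    grouped = defaultdict(list)
    for comment in comments:
        grouped[comment.tag].append(comment)
    return dict(grouped)


def global_points(comments):
    """Return only the comments the reviewer flagged as main points."""
    return [c for c in comments if c.is_global]


# Made-up sample comments, loosely based on the inline notes quoted earlier.
comments = [
    InlineComment(2, "recieve", "typo", "typo: fix this word"),
    InlineComment(
        5,
        "our results show",
        "missing",
        "cite X here, which negates the results you are obtaining",
        is_global=True,
    ),
    InlineComment(7, "Table 3", "suggestion", "placeholder suggestion text"),
]

# What an AC view might show first: only the flagged global points.
for c in global_points(comments):
    print(f"[{c.tag}] p.{c.page}: {c.note}")
```

The point of the sketch is that the reviewer only adds a tag (and optionally a flag) while annotating, and the grouping that the separate text areas ask for falls out of a filter instead of a second pass.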

Something I did not mention in that conversation: I often want to extract these highlights and ask GPT/Claude/LLM of the month to separate them into categories to put into those text areas, but the outcome never sounds natural, and there are always slight hallucinations that are difficult to catch. So, if that doesn’t work well either, maybe we try to change the review UX itself?

Cite This Page

@article{jaiswal2024pleasedonthave1,

title = {Please Don’t Have 10 Textareas in Review Forms},

author = {Jaiswal, Mimansa},

journal = {mimansajaiswal.github.io},

year = {2024},

month = {Jul},

url = {https://mimansajaiswal.github.io/posts/please-dont-have-10-textareas-in-review-forms/}

}