Over the past three years, starting in 2021, I've had multiple ideas about better ways to evaluate the things we currently do with LLMs. Whether it is general evaluation on a single task, multi-run evaluation, theory-of-mind evaluation, or something like RAG, where you retrieve over a set of documents, I've always had these ideas and really wanted to implement them.

I think the problem I keep getting stuck on is that the data sets don't actually make sense to me. Sure, there's a lot of conversation about how many of these data sets are useless, how companies need private data sets, and so on. But you're not publishing a paper on a company's private data sets; you're publishing it on public data sets, and so many public data sets right now are largely generated by large models. That makes no sense to me, because you're then using them to evaluate these large models.

Every single time I "look at the data", the thing that everyone asks you to do and the thing that I've been doing throughout my PhD, I find it disappointing. Then I go into this realm of "Oh, so I should create it myself," and that is something that I really like to do. I have done it before, but what is holding me back is the question of how many samples a data set needs for it to be viable to publish, and for the research that uses it to be publishable and useful.

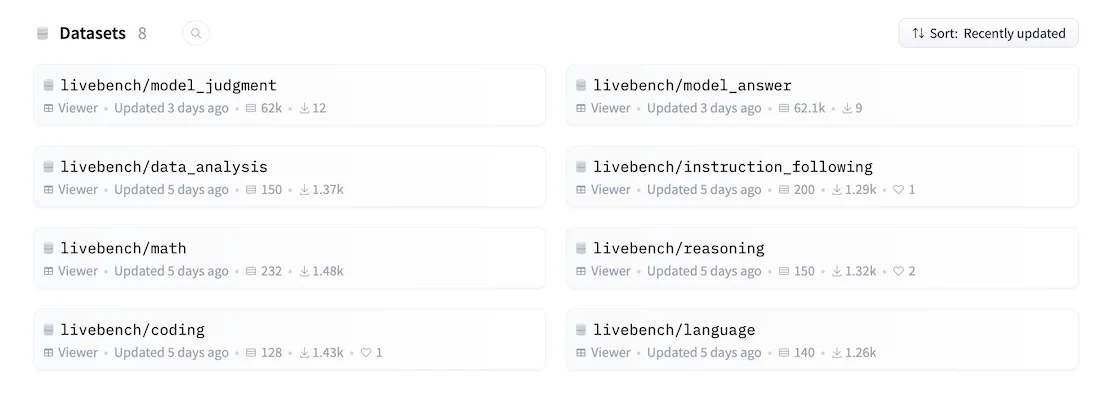

I think I have been inflating the number in my head, whereas some of the data sets that I like have barely 500 samples. The ones I have liked recently have around 100 to 150 samples. I think I need to change my mindset about creating a data set and putting it out there. If I'm running experiments on it and publishing the resulting work, it doesn't need to be a data set with thousands of samples.

I do have an eye for good data and good data curation. I should be able to create a good data set that has just 150 samples, is still viable to use, and tells us something useful about the model we run it on.
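One rough way to sanity-check the 150-sample intuition is to look at how uncertain a measured accuracy is at different data set sizes. The sketch below is a back-of-the-envelope calculation under stated assumptions, not a result from this post: it treats every sample as an independent right/wrong outcome, uses the normal-approximation 95% interval, and assumes the worst case of a true accuracy of 0.5. The helper name `accuracy_margin` is hypothetical.

```python
import math


def accuracy_margin(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of the normal-approximation interval for an accuracy p
    measured on n independent samples (z = 1.96 corresponds to ~95%).

    Assumption: samples are independent and scored as right/wrong.
    p = 0.5 is the worst case, since p * (1 - p) is largest there.
    """
    return z * math.sqrt(p * (1 - p) / n)


if __name__ == "__main__":
    # Compare the small sizes mentioned in the post with larger ones.
    for n in (100, 150, 500, 1000, 5000):
        margin_points = 100 * accuracy_margin(n)
        print(f"n={n:>5}: +/- {margin_points:.1f} accuracy points")
```

Under these assumptions, 150 samples gives a margin of roughly 8 accuracy points in the worst case, compared with about 3 points at 1,000 samples. A small, carefully curated set can therefore still say something useful when the differences between models are large, which is the case this kind of data set seems best suited for.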

Cite This Page

@article{jaiswal2024iamstuckinaloop,
  title = {I am Stuck in a Loop of Datasets ↔ Techniques},
  author = {Jaiswal, Mimansa},
  journal = {mimansajaiswal.github.io},
  year = {2024},
  month = {Aug},
  url = {https://mimansajaiswal.github.io/posts/i-am-stuck-in-a-loop-of-datasets-techniques/}
}